ICITY

Towards the next generation of smart and visual multimodal sensor networks.

The context of the project

Smart cameras have found their way into many applications, such as fall detection, license plate detection and biometric recognition. A network of spatially distributed smart camera devices, called a visual sensor network, makes it possible to process and fuse images of a scene from a variety of viewpoints into a form more useful than the individual images. Typical applications are video surveillance, smart homes and meeting rooms. All these applications require high-end processing capabilities, offered by high-end computers or embedded systems on chip (SoC). Because the smart cameras in such a network mainly use video information, they also require high bandwidth for transmitting the video and consume a lot of power.

In this project, we will investigate the abilities of a power-efficient, cooperating, sensor-fused multimodal camera network. The proposed multimodal cooperating nodes combine optical camera information with IR images from infrared (IR) sensor arrays and sound-map images from acoustic sensor arrays. Infrared sensor arrays provide the ability to create an IR-radiation image of objects. The IR intensity increases with the temperature of the object, which gives a straightforward technique to distinguish between different types of organic and non-organic materials and between different types of biological entities (e.g. small animals versus human beings). An acoustic camera will enable the measurement of both noise intensity and noise direction, creating the ability to detect suspicious activity and hidden motion (e.g. a car passing behind a wall) in a scene. Thanks to recent developments in low-cost micro-electro-mechanical systems (MEMS) sensors and SoC technology, relatively low-cost microphone sensor arrays can be developed for this purpose. Sensor networks consisting of these sensor-fused multimodal cameras will lead to more effective solutions.

In contrast with classic camera solutions, the fused-sensor cameras do not need to send the full video, audio or IR information. Instead, they will send a tensor-based data representation in which each tensor value represents the fused information. Because multiple sensors are integrated in a fused-sensor camera, new low-power and low-data-rate techniques to detect areas of interest and to detect events in real time become feasible. Interconnecting fused-sensor cameras as defined in this project creates new opportunities in healthcare, environmental monitoring and transport, while minimising the impact on the growing carbon footprint created by internet traffic.
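
The idea of sending fused tensor values instead of full sensor streams can be illustrated with a minimal sketch. It is not the project's actual representation: the grid layout, the `FusedCell` type, the weights and the threshold are all assumptions made for illustration. The sketch stacks three co-registered, normalised sensor grids into a small tensor and keeps only the cells whose weighted score marks them as an area of interest.

```python
from dataclasses import dataclass
from typing import List, Tuple

Grid = List[List[float]]              # rows x cols, values normalised to [0, 1]
Cell = Tuple[float, float, float]     # (optical, infrared, acoustic)


@dataclass(frozen=True)
class FusedCell:
    """One tensor cell selected for transmission (hypothetical type)."""
    row: int
    col: int
    values: Cell


def fuse(optical: Grid, infrared: Grid, acoustic: Grid) -> List[List[Cell]]:
    """Stack three co-registered grids into a rows x cols x 3 tensor."""
    return [
        [(o, i, a) for o, i, a in zip(o_row, i_row, a_row)]
        for o_row, i_row, a_row in zip(optical, infrared, acoustic)
    ]


def cells_of_interest(
    tensor: List[List[Cell]],
    weights: Cell = (0.2, 0.5, 0.3),   # assumed weights, not from the project
    threshold: float = 0.5,            # assumed threshold, not from the project
) -> List[FusedCell]:
    """Keep only the cells whose weighted score reaches the threshold."""
    selected = []
    for r, row in enumerate(tensor):
        for c, values in enumerate(row):
            score = sum(w * v for w, v in zip(weights, values))
            if score >= threshold:
                selected.append(FusedCell(r, c, values))
    return selected


# A 2 x 2 scene in which only the bottom-right cell is bright, warm and loud.
optical = [[0.1, 0.2], [0.1, 0.9]]
infrared = [[0.0, 0.1], [0.1, 0.8]]
acoustic = [[0.0, 0.0], [0.2, 0.7]]

tensor = fuse(optical, infrared, acoustic)
payload = cells_of_interest(tensor)
print(len(payload), "of", len(tensor) * len(tensor[0]), "cells would be sent")
```

In this toy scene only one of the four cells passes the threshold, so the node would transmit a single fused cell rather than three full images.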

The objective of the project

The main objective of this project is to research and develop the next generation of multimodal fused-sensor cameras and the next generation of interconnected fused-sensor camera systems, which fuse information from visual, acoustic and infrared sensors. The second objective is to explore new applications and to determine how existing applications can benefit from such fused-sensor camera networks. We distinguish the following main activities:

- Development of the integrated fused-sensor camera, combining vectorial acoustic camera data, IR-sensor data and visual camera data. This will be based on the sensor arrays currently available at our lab. Research will be done on the parametrization of the fused-sensor arrays, together with a study of the achievable performance of the fused sensors. Our current simulator for acoustic sensor arrays will be extended to deal with other sensor types (video and IR) and used for the theoretical parametrization research. Based on the simulation results, we will define the basic architecture for a first prototype, providing the necessary embedded processing power for the embedded fusion algorithms. The experimental platform will be based on a reconfigurable computing platform (FPGA) to which the sensor arrays (image, microphone, IR) will be attached.

- Development of new data representation methods for the fused-sensor data, and development of data fusion techniques and algorithms for sensor arrays. Possible fusion algorithms determine the area of interest in an image, predict events that cannot be predicted by a single sensor, and activate one or more sensors on the platform or force them to sleep in order to save energy.

- Development of a network architecture for interconnected fused-sensor cameras, in which we take into account the trade-off between embedded computing capabilities, required buffering memory and communication requirements. Here too, the simulator will help to identify the parameters and mechanisms related to network facilities, security, synchronization and data alignment. The best-suited communication protocol and corresponding physical transport layer protocol will be implemented, taking energy efficiency into account to allow future autonomous operation. Next, the distributed processing algorithms will be implemented and the results compared with the simulator. New distributed fusion algorithms that generate a more accurate or more timely result will be developed.

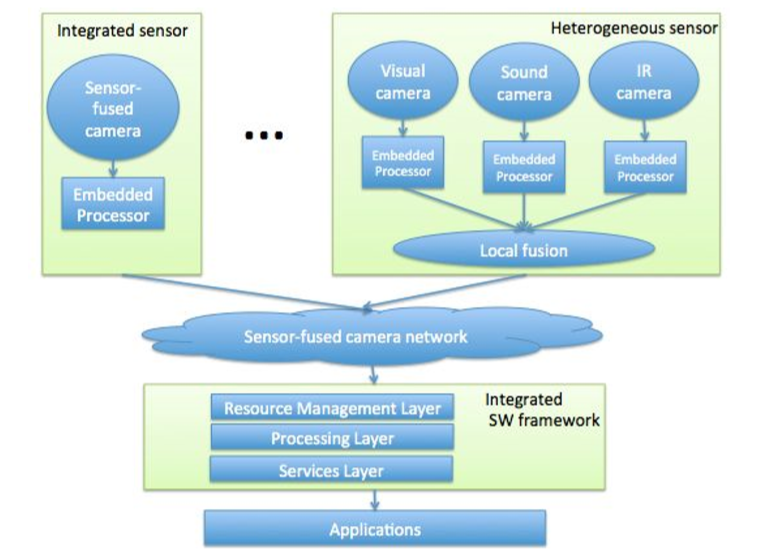

- Integration in an open source framework that combines the analyzed information in a secure and private way. Third-party developers will get the opportunity to use the developed hardware and the corresponding algorithms and methods. We distinguish three layers in this framework. The lowest layer, the resource management layer, will be responsible for correct communication among the fused sensors in the field, taking into account possible synchronization problems and network delays. After the data has been aligned, it will be sent to the middle layer, the processing layer. Here, the received information will be processed for specific purposes such as the localisation of noise and the identification of people and objects. At this point in the framework, a plugin mechanism will be provided to allow developers to add their own algorithms for specific applications. Finally, the top layer, called the service layer, will make the results available to other applications through an open application interface.
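
The three layers described above can be sketched roughly as follows. This is only an illustration under stated assumptions: the class names, the `register`/`run`/`publish`/`get` methods, the timestamp tolerance and the example plugin are all hypothetical and are not taken from the project's framework. The lowest layer aligns readings from different sensors by timestamp, the middle layer runs registered plugins on the aligned frames, and the top layer exposes the results.

```python
from typing import Callable, Dict, List

Reading = Dict[str, float]   # {"t": timestamp in seconds, "value": measurement}
Frame = Dict[str, float]     # {"t": ..., "<sensor name>": value, ...}
Plugin = Callable[[List[Frame]], object]


class ResourceLayer:
    """Lowest layer: aligns readings from different sensors in time."""

    def __init__(self, tolerance: float = 0.05):
        self.tolerance = tolerance   # assumed maximum clock offset in seconds

    def align(self, streams: Dict[str, List[Reading]]) -> List[Frame]:
        reference = sorted(streams)[0]   # first sensor name acts as time base
        frames = []
        for ref in streams[reference]:
            frame = {"t": ref["t"], reference: ref["value"]}
            for name, readings in streams.items():
                if name == reference:
                    continue
                if not readings:
                    break
                nearest = min(readings, key=lambda r: abs(r["t"] - ref["t"]))
                if abs(nearest["t"] - ref["t"]) > self.tolerance:
                    break            # no matching reading: drop this frame
                frame[name] = nearest["value"]
            else:
                frames.append(frame)  # every sensor contributed a reading
        return frames


class ProcessingLayer:
    """Middle layer: runs third-party plugins on the aligned frames."""

    def __init__(self) -> None:
        self._plugins: Dict[str, Plugin] = {}

    def register(self, name: str, plugin: Plugin) -> None:
        self._plugins[name] = plugin

    def run(self, frames: List[Frame]) -> Dict[str, object]:
        return {name: plugin(frames) for name, plugin in self._plugins.items()}


class ServiceLayer:
    """Top layer: makes results available to other applications."""

    def __init__(self) -> None:
        self._results: Dict[str, object] = {}

    def publish(self, results: Dict[str, object]) -> None:
        self._results.update(results)

    def get(self, name: str) -> object:
        return self._results[name]


def loudest_time(frames: List[Frame]) -> float:
    """Example plugin: timestamp of the frame with the highest acoustic value."""
    return max(frames, key=lambda f: f["acoustic"])["t"]


streams = {
    "acoustic": [{"t": 0.00, "value": 0.1}, {"t": 0.10, "value": 0.9},
                 {"t": 0.50, "value": 0.4}],
    "infrared": [{"t": 0.01, "value": 0.3}, {"t": 0.12, "value": 0.8}],
    "optical": [{"t": 0.02, "value": 0.2}, {"t": 0.11, "value": 0.5}],
}

frames = ResourceLayer().align(streams)
processing = ProcessingLayer()
processing.register("loudest_time", loudest_time)
service = ServiceLayer()
service.publish(processing.run(frames))
print(service.get("loudest_time"))
```

The acoustic reading at 0.50 s has no infrared or optical counterpart within the tolerance, so the resource layer drops it before any plugin sees it. Keeping plugins as plain callables is one simple way to let developers add algorithms without touching the other two layers.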

The figure above represents the architecture of the fused-sensor camera network (corresponding to objectives 1 to 3), together with the software framework (objective 4), which will be used to develop demonstration applications.